Everywhere you go these days, you hear about deep learning’s impressive advancements. New deep learning libraries, tools, and products get announced on a regular basis, making the average data scientist feel like they’re missing out if they don’t hop on the deep learning bandwagon. However, as Kamil Bartocha put it in his post The Inconvenient Truth About Data Science, 95% of tasks do not require deep learning. This is obviously a made-up number, but it’s probably an accurate representation of the everyday reality of many data scientists. This post discusses an often-overlooked area of study that is of much higher relevance to most data scientists than deep learning: causality.

Causality is everywhere

An understanding of cause and effect is something that is not unique to humans. For example, the many videos of cats knocking things off tables appear to exemplify experimentation by animals. If you are not familiar with such videos, a quick search will fix that. The thing to notice is that cats appear genuinely curious about what happens when they push an object. And they tend to repeat the experiment to verify that if you push something off, it falls to the ground.

Humans rely on much more complex causal analysis than that done by cats – an understanding of the long-term effects of one’s actions is crucial to survival. Science, as defined by Wikipedia, is a systematic enterprise that creates, builds and organizes knowledge in the form of testable explanations and predictions about the universe. Causal analysis is key to producing explanations and predictions that are valid and sound, which is why understanding causality is so important to data scientists, traditional scientists, and all humans.

What is causality?

It is surprisingly hard to define causality. Just like cats, we all have an intuitive sense of what causality is, but things get complicated on deeper inspection. For example, few people would disagree with the statement that smoking causes cancer. But does it cause cancer immediately? Would smoking a few cigarettes today and never again cause cancer? Do all smokers develop cancer eventually? What about light smokers who live in areas with heavy air pollution?

Samantha Kleinberg summarises it very well in her book, Why: A Guide to Finding and Using Causes:

While most definitions of causality are based on Hume’s work, none of the ones we can come up with cover all possible cases and each one has counterexamples another does not. For instance, a medication may lead to side effects in only a small fraction of users (so we can’t assume that a cause will always produce an effect), and seat belts normally prevent death but can cause it in some car accidents (so we need to allow for factors that can have mixed producer/preventer roles depending on context).

The question often boils down to whether we should see causes as a fundamental building block or force of the world (that can’t be further reduced to any other laws), or if this structure is something we impose. As with nearly every facet of causality, there is disagreement on this point (and even disagreement about whether particular theories are compatible with this notion, which is called causal realism). Some have felt that causes are so hard to find as for the search to be hopeless and, further, that once we have some physical laws, those are more useful than causes anyway. That is, “causes” may be a mere shorthand for things like triggers, pushes, repels, prevents, and so on, rather than a fundamental notion.

It is somewhat surprising, given how central the idea of causality is to our daily lives, but there is simply no unified philosophical theory of what causes are, and no single foolproof computational method for finding them with absolute certainty. What makes this even more challenging is that, depending on one’s definition of causality, different factors may be identified as causes in the same situation, and it may not be clear what the ground truth is.

Why study causality now?

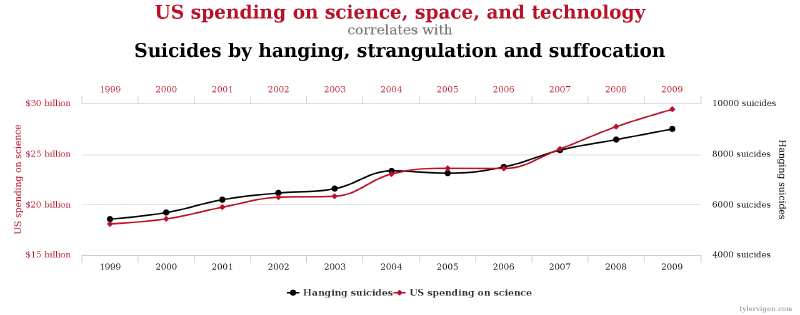

While it’s hard to prove conclusively, it seems to me that interest in formal causal analysis has increased in recent years. My hypothesis is that it’s just a natural progression along the levels of data’s hierarchy of needs. At the start of the big data boom, people were mostly concerned with storing and processing large amounts of data (e.g., using Hadoop, Elasticsearch, or your favourite NoSQL database). Just having your data flowing through pipelines is nice, but not very useful, so the focus switched to reporting and visualisation to extract insights about what happened (commonly known as business intelligence). While having a good picture of what happened is great, it isn’t enough – you can make better decisions if you can predict what’s going to happen, so the focus switched again to predictive analytics.

Those who are familiar with predictive analytics know that models often end up relying on correlations between the features and the predicted labels. Using such models without considering the meaning of the variables can lead us to erroneous conclusions and potentially harmful interventions. For example, based on a graph showing that US spending on science correlates with the number of suicides by hanging, we may recommend that the US government decrease its spending on science to reduce such suicides.
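Here is a minimal sketch of how such a spurious correlation can arise. It assumes two synthetic yearly series (the names are only illustrative, not real data) that each trend upward over time and are generated independently of each other. The shared time trend alone is enough to produce a near-perfect correlation.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def diffs(xs):
    """Year-over-year changes, which remove a linear trend."""
    return [b - a for a, b in zip(xs, xs[1:])]

rng = random.Random(42)
years = range(20)
# Hypothetical synthetic series: each is an upward trend plus its own independent
# noise. Neither series is used to compute the other.
science_spending = [100 + 2.0 * t + rng.gauss(0, 1) for t in years]
hangings = [50 + 2.0 * t + rng.gauss(0, 1) for t in years]

r = pearson(science_spending, hangings)
r_diff = pearson(diffs(science_spending), diffs(hangings))
print(f"correlation of levels:  {r:.2f}")       # close to 1, driven only by the shared trend
print(f"correlation of changes: {r_diff:.2f}")  # only independent noise remains, so any correlation is chance
```

Differencing is just one simple way of removing a shared trend; it does not prove anything causal either, it merely removes one obvious source of spurious correlation.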

Causal analysis aims to identify factors that are independent of spurious correlations, allowing stakeholders to make well-informed decisions. It is all about getting to the top of the DIKW (data-information-knowledge-wisdom) pyramid by understanding why things happen and what we can do to change the world. However, finding true causes can be very hard, especially in cases where you can’t perform experiments. Judea Pearl explains it well:

We know, from first principles, that any causal conclusion drawn from observational studies must rest on untested causal assumptions. Cartwright (1989) named this principle ‘no causes in, no causes out,’ which follows formally from the theory of equivalent models (Verma and Pearl, 1990); for any model yielding a conclusion C, one can construct a statistically equivalent model that refutes C and fits the data equally well.
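The last clause of the quote can be illustrated in the simplest setting, assuming linear relationships with Gaussian noise. The sketch below generates data in which x causes y, then fits both the true model (x causes y) and the reversed model (y causes x) by maximum likelihood. The function name `max_loglik` and the simulated data are illustrative assumptions, not something taken from Pearl.

```python
import math
import random

def max_loglik(cause, effect):
    """Maximised log-likelihood of the linear-Gaussian model cause -> effect.

    Model: cause ~ N(mu, s2), effect = a + b * cause + noise, noise ~ N(0, r2),
    with every parameter set to its maximum-likelihood estimate.
    """
    n = len(cause)
    mc = sum(cause) / n
    me = sum(effect) / n
    scc = sum((c - mc) ** 2 for c in cause)
    see = sum((e - me) ** 2 for e in effect)
    sce = sum((c - mc) * (e - me) for c, e in zip(cause, effect))
    s2 = scc / n                     # MLE variance of the cause
    r2 = (see - sce ** 2 / scc) / n  # MLE residual variance after regressing effect on cause

    def gaussian_ll(var):
        # Log-likelihood of n points under a Gaussian whose variance is the MLE `var`
        return -0.5 * n * (math.log(2 * math.pi * var) + 1)

    return gaussian_ll(s2) + gaussian_ll(r2)

rng = random.Random(0)
x = [rng.gauss(0, 1) for _ in range(1000)]
y = [2.0 * xi + rng.gauss(0, 1) for xi in x]  # generated so that x causes y

ll_xy = max_loglik(x, y)  # model in which x causes y (the true direction)
ll_yx = max_loglik(y, x)  # model in which y causes x (the reversed direction)
print(ll_xy, ll_yx)       # identical up to floating-point rounding
```

Both models fit the data exactly equally well, so the data alone cannot tell us which direction is causal. Under other assumptions (e.g., non-Gaussian noise) the two directions can become distinguishable, but only because an extra, untested assumption has been added.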

What this means in practice is that you can’t, for example, conclusively prove that smoking causes cancer without making some reasonable assumptions about the mechanisms at play. For ethical reasons, we can’t perform a randomised controlled trial where a test group is forced to smoke for years while a control group is forced not to smoke. Therefore, our conclusions about the causal link between smoking and cancer are drawn from observational studies and an understanding of the mechanisms by which various cancers develop (e.g., the effect of cigarette smoke on individual cells can be studied without forcing people to smoke). Tobacco companies have exploited this fact for years, claiming that some mysterious genetic factors raise the probability of both smoking and cancer. Fossil fuel and food companies use similar arguments to sell their products and block attempts to regulate their industries (as discussed in previous posts on the hardest parts of data science and nutritionism). Fighting against such arguments is an uphill battle, as it is easy to sow doubt with a few simplistic catchphrases, while proving and communicating causality to laypeople is much harder (or impossible when it comes to deeply held irrational beliefs).
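To make the tobacco companies’ argument concrete, here is a toy simulation. It assumes made-up probabilities and a hypothetical gene that raises both the chance of smoking and the chance of cancer, while smoking itself has no effect at all. This is not a model of real smoking data; it only shows why observational data cannot rule out such a confounder on its own, and why the (unethical) randomised trial would.

```python
import random

rng = random.Random(1)
N = 100_000

def simulate_person(randomise_smoking):
    """One person in a toy world where a hypothetical gene drives both smoking and cancer."""
    gene = rng.random() < 0.3  # hypothetical confounder, present in 30% of people
    if randomise_smoking:
        smokes = rng.random() < 0.5  # experiment: a coin flip decides who smokes
    else:
        smokes = rng.random() < (0.6 if gene else 0.2)  # observation: the gene raises smoking
    # Cancer depends only on the gene; smoking has no effect in this toy model.
    cancer = rng.random() < (0.3 if gene else 0.05)
    return smokes, cancer

def cancer_rate_gap(people):
    """Cancer rate among smokers minus cancer rate among non-smokers."""
    smokers = [cancer for smokes, cancer in people if smokes]
    non_smokers = [cancer for smokes, cancer in people if not smokes]
    return sum(smokers) / len(smokers) - sum(non_smokers) / len(non_smokers)

obs_gap = cancer_rate_gap([simulate_person(False) for _ in range(N)])
rct_gap = cancer_rate_gap([simulate_person(True) for _ in range(N)])
print(f"observational gap: {obs_gap:.3f}")  # roughly 0.1, despite no causal effect
print(f"randomised gap:    {rct_gap:.3f}")  # close to 0
```

A similar observational gap would also appear if smoking really did cause cancer, so the gap by itself cannot separate the two stories. Randomisation breaks the link between the gene and smoking, which is exactly what observational studies cannot do and why knowledge of the mechanisms matters so much here.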

My causality journey is just beginning

My interest in formal causal analysis was seeded a couple of years ago with a reading group that was dedicated to Judea Pearl’s work. We didn’t get very far, as I was a bit disappointed with what causal calculus can and cannot do. This may have been because I didn’t come in with the right expectations – I expected a black box that automatically finds causes. Reading Samantha Kleinberg’s excellent book Why: A Guide to Finding and Using Causes recently has made my expectations somewhat more realistic:

Thousands of years after Aristotle’s seminal work on causality, hundreds of years after Hume gave us two definitions of it, and decades after automated inference became a possibility through powerful new computers, causality is still an unsolved problem. Humans are prone to seeing causality where it does not exist and our algorithms aren’t foolproof. Even worse, once we find a cause it’s still hard to use this information to prevent or produce an outcome because of limits on what information we can collect and how we can understand it. After looking at all the cases where methods haven’t worked and researchers and policy makers have gotten causality really wrong, you might wonder why you should bother.

[…]

Rather than giving up on causality, what we need to give up on is the idea of having a black box that takes some data straight from its source and emits a stream of causes with no need for interpretation or human intervention. Causal inference is necessary and possible, but it is not perfect and, most importantly, it requires domain knowledge.

Kleinberg’s book is a great general intro to causality, but it intentionally omits the mathematical details behind the various methods. I am now ready to once again go deeper into causality, perhaps starting with Kleinberg’s more technical book, Causality, Probability, and Time. Other recommendations are very welcome!

Cover image source: xkcd: Correlation

Public comments are closed, but I love hearing from readers. Feel free to contact me with your thoughts.

Jim Savage

2016-02-15 03:04:50

Hey Yanir - great post.

If you’ve not already, you should read Mostly Harmless Econometrics. They take quite a different approach to causality than Pearl (though there is a lot of conceptual overlap). It definitely helps build intuition for the topic. It’s also worth reading the relevant mid-70s papers from Rubin.

Yanir Seroussi

2016-02-18 20:42:41

I agree that in many cases the reasoning behind models isn’t interesting, as long as the models produce satisfactory results. Web search is actually a good example. Yes, many end users don’t really care how Google ranks pages, but SEO practitioners go to great lengths to understand search algorithms and get pages to rank well (see https://moz.com/search-ranking-factors for example).

As data scientists, it’s important to consider model stability in production. Sculley et al. said it well in their paper on machine learning technical debt (http://static.googleusercontent.com/media/research.google.com/en//pubs/archive/43146.pdf): “Machine learning systems often have a difficult time distinguishing the impact of correlated features. This may not seem like a major problem: if two features are always correlated, but only one is truly causal, it may still seem okay to ascribe credit to both and rely on their observed co-occurrence. However, if the world suddenly stops making these features co-occur, prediction behavior may change significantly.”

Finally, in many cases what we really care about is interventionality. I don’t think it’s a real word, but what it means is that you don’t really care whether A causes B, you want to know whether intervening to change A would change B. These inferences are critical in fields like medicine and marketing, but we can look at an example from the world of blogging, which is probably more relevant to you. Many bloggers would like to attract more readers. A possible costly intervention would be to switch platforms from WordPress to Medium. Cheaper interventions may be changing the site’s layout, writing titles that get people interested, and posting links to your content on relevant channels. Another intervention would be trying to post at different times (as implied by WordPress insights and discussed in http://yanirseroussi.com/2015/12/08/this-holiday-season-give-me-real-insights/). Obviously, one would like to apply the interventions with the highest return on investment first, and data that helps with ranking the interventions is very interesting.
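The Sculley et al. point about correlated features can be sketched with a toy linear setup, assuming only x1 truly drives y while x2 is an exact copy of x1 during training. Ridge regression (a swapped-in, deliberately simple model; the names and the penalty `lam` are illustrative) is used because plain least squares has no unique solution when two features are identical. The model splits credit between the two features and then degrades once the world stops making them co-occur.

```python
import random

rng = random.Random(7)

def make_data(n, linked):
    """Toy data: y depends only on x1; x2 copies x1 when `linked`, else is independent."""
    rows = []
    for _ in range(n):
        x1 = rng.gauss(0, 1)
        x2 = x1 if linked else rng.gauss(0, 1)
        y = x1 + rng.gauss(0, 0.1)  # only x1 is causal
        rows.append((x1, x2, y))
    return rows

def fit_ridge(rows, lam=1.0):
    """Two-feature ridge regression without intercept, solved via the 2x2 normal equations."""
    a = sum(x1 * x1 for x1, _, _ in rows) + lam
    b = sum(x1 * x2 for x1, x2, _ in rows)
    d = sum(x2 * x2 for _, x2, _ in rows) + lam
    u = sum(x1 * y for x1, _, y in rows)
    v = sum(x2 * y for _, x2, y in rows)
    det = a * d - b * b
    # Cramer's rule for [[a, b], [b, d]] @ [w1, w2] = [u, v]
    return ((d * u - b * v) / det, (a * v - b * u) / det)

def mse(rows, w):
    """Mean squared error of the linear predictor w[0] * x1 + w[1] * x2."""
    return sum((y - w[0] * x1 - w[1] * x2) ** 2 for x1, x2, y in rows) / len(rows)

train = make_data(1000, linked=True)     # x1 and x2 always co-occur
shifted = make_data(1000, linked=False)  # the world stops making them co-occur
w = fit_ridge(train)
train_mse = mse(train, w)
shifted_mse = mse(shifted, w)
print("weights:", [round(wi, 2) for wi in w])  # credit split roughly equally between x1 and x2
print("train MSE:", round(train_mse, 3))
print("MSE after decoupling:", round(shifted_mse, 3))
```

Nothing in the training data distinguishes the causal feature from its copy, so the fitted model is perfectly reasonable until the co-occurrence breaks.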

Greg Gandenberger

2016-02-18 20:02:05

Causation, Prediction, and Search is also seminal (https://www.cs.cmu.edu/afs/cs.cmu.edu/project/learn-43/lib/photoz/.g/scottd/fullbook.pdf).

Disclosure: I did my PhD just down the street from the authors of Causation, Prediction, and Search, and Woodward was on my thesis committee.

Greg Gandenberger

2016-02-18 20:12:58

There is a subtle difference between Woodward’s approach and that of Pearl and of Spirtes et al., which Glymour discusses in the following places:

https://www.ncbi.nlm.nih.gov/pubmed/24887161 http://repository.cmu.edu/cgi/viewcontent.cgi?article=1280&context=philosophy

Basically, Woodward starts with the notion of an intervention on a variable and defines other concepts (e.g. direct cause) in terms of it, whereas Pearl and Spirtes et al. start with the notion of direct cause. One consequence of this difference is that properties like sex and race that cannot be intervened upon in a straightforward way cannot be causes for Woodward, strictly speaking, but can be for Pearl and Spirtes et al. This is a fine point, however, and it’s very nearly true that they simply provide alternative formulations of the same theory, with Woodward focusing on conceptual issues and the others focusing on methodology.

urishin

2016-08-01 21:04:59

Great writeup! I think causality will become something that data scientists will need to acknowledge and think about more explicitly.

Coming from machine learning, it took me a while to wrap my head around the subtle but important differences in the way similar ideas are used in prediction vs. causal inference.

Recently I gave an ICML tutorial about causality, together with David Sontag. This might be of interest to your readers as a starting point, particularly for people who are well-versed in ML. It’s here: www.cs.nyu.edu/~shalit/tutorial.html

Digital Cosmology (@DCosmology)

2016-12-08 16:17:50

“According to Nicolas Malebranche and other seventeenth century Cartesian occasionalists, what we actually call causes are really no more than occasions on which, in accordance with his own laws, God acts to bring about the effect. If one were to replace the notion of God in the occasionalism doctrine by the notion of a mechanism, then a modern (or mechanical) occasionalist could assert that what we actually call causes are no more than occasions on which a mechanism acts to bring about the effect.”

More info here: http://fqxi.org/community/forum/topic/846 and here: http://www.digitalcosmology.com/Blog/beyond-intelligent-design/

david

2017-12-03 13:06:21

Hi Yanir,

Great post. If you are serious about exploring causality further, check out Transfer Entropy and related information-theory-inspired measures. I assure you, you will be amazed.
